Quick Tips on Parsing Logs

1. Understanding Unstructured Log Formats

Unstructured logs lack predictable formatting. They may include untagged text, inconsistent timestamps, irregular delimiters, multi-line events, or proprietary formats. Engineers must first collect representative samples and identify patterns before building a parser.
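One way to find those patterns is to mask the variable parts of each sample line and count the layouts that remain. The Python sketch below assumes that IPv4 addresses and digit runs are the main variable tokens; the sample lines are invented for illustration.

```python
import re
from collections import Counter

IPV4 = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b")
NUMBER = re.compile(r"\d+")

def line_shape(line):
    """Mask variable tokens so lines that share a layout collapse to one shape."""
    masked = IPV4.sub("<IP>", line)   # mask IPv4 addresses before bare numbers
    return NUMBER.sub("<N>", masked)  # then mask every remaining digit run

def shape_counts(lines):
    """Count how many sample lines share each masked layout."""
    return Counter(line_shape(line) for line in lines)

# Hypothetical sample lines; in practice, pull a larger sample from the real source.
samples = [
    "2024-01-15 10:22:01 sshd[411]: Failed password for root from 10.0.0.5 port 52100",
    "2024-01-15 10:22:09 sshd[412]: Failed password for root from 10.0.0.7 port 52144",
    "kernel: eth0 link up",
]

for shape, count in shape_counts(samples).most_common():
    print(count, shape)
```

Frequent shapes are candidates for a dedicated parser; rare shapes often point to multi-line events or unusual messages that need separate handling.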

2. Parsing Methods

Common parsing techniques include:

- Regex-based extraction
- Grok patterns
- Delimiter-based parsing
- JSON transformation (preferred)
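Delimiter-based parsing and JSON transformation often go together: split a `key=value` line into fields, then emit the result as JSON. The Python sketch below assumes whitespace-separated pairs and uses a hypothetical sample line; values that themselves contain spaces would need quoting rules or a regex instead.

```python
import json

def parse_kv(line, pair_sep=None, kv_sep="="):
    """Split a line into pairs on pair_sep (None = any whitespace), then split each pair once on kv_sep."""
    record = {}
    for token in line.split(pair_sep):
        key, sep, value = token.partition(kv_sep)
        if sep:  # ignore tokens that are not key=value
            record[key] = value
    return record

line = "ts=2024-01-15T10:22:01Z user=alice src=10.0.0.5 status=FAILED"
print(json.dumps(parse_kv(line)))
# {"ts": "2024-01-15T10:22:01Z", "user": "alice", "src": "10.0.0.5", "status": "FAILED"}
```

Because `partition` splits only on the first separator, a value such as `query=a=b` keeps its embedded `=`.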

3. Normalization

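Normalization maps raw fields into a standardized schema, such as the Splunk Common Information Model (CIM), Microsoft Sentinel's Advanced Security Information Model (ASIM), or the Elastic Common Schema (ECS); QRadar reaches a similar result through DSM mappings to its event categories (QIDs). Consistent field names let correlation rules, dashboards, and MITRE ATT&CK-based detections work across sources.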
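A normalization step is mostly a rename table plus a value mapping. The Python sketch below maps the raw fields from the login example in section 5 onto Elastic ECS names (`@timestamp`, `user.name`, `source.ip`, `event.outcome`, `event.reason`). The mapping table and the SUCCESS/FAILED status values are assumptions, and the dotted keys are kept flat rather than nested.

```python
# Hypothetical mapping from the raw login fields to Elastic ECS field names.
FIELD_MAP = {
    "timestamp": "@timestamp",
    "username": "user.name",
    "src_ip": "source.ip",
    "status": "event.outcome",
    "reason": "event.reason",
}
# ECS expects event.outcome to be "success", "failure", or "unknown".
OUTCOME_MAP = {"SUCCESS": "success", "FAILED": "failure"}

def normalize(raw):
    """Rename known fields to ECS names; pass unknown fields through unchanged."""
    normalized = {}
    for key, value in raw.items():
        target = FIELD_MAP.get(key, key)
        if target == "event.outcome":
            value = OUTCOME_MAP.get(str(value).upper(), "unknown")
        normalized[target] = value
    return normalized

print(normalize({"username": "j.doe", "src_ip": "10.0.0.5", "status": "FAILED"}))
# {'user.name': 'j.doe', 'source.ip': '10.0.0.5', 'event.outcome': 'failure'}
```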

4. Enrichment

Parsed data can be enriched with external and internal sources: GeoIP, threat intelligence, Active Directory metadata, CMDB asset records, and identity provider context. Enrichment adds context that the raw event lacks, which improves detection accuracy and makes alerts easier to triage.
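Enrichment is usually a lookup keyed on a normalized field. The Python sketch below assumes an ECS-style `source.ip` field and uses small in-memory dictionaries as hypothetical stand-ins for a threat-intelligence feed and a CMDB; the output field names `threat_intel_hit` and `asset` are also invented for illustration.

```python
import ipaddress

# Hypothetical in-memory stand-ins for real enrichment sources.
THREAT_INTEL = {"203.0.113.7"}                                  # known-bad IPs
ASSETS = {"10.0.0.5": {"host": "ws-014", "owner": "finance"}}   # CMDB-style records

def enrich(event):
    """Return a copy of event with threat-intel and asset context for source.ip."""
    enriched = dict(event)
    raw_ip = event.get("source.ip")
    if raw_ip is None:
        return enriched
    try:
        ip = str(ipaddress.ip_address(raw_ip))  # validate and canonicalize the address
    except ValueError:
        return enriched                         # leave unparseable values untouched
    enriched["threat_intel_hit"] = ip in THREAT_INTEL
    if ip in ASSETS:
        enriched["asset"] = ASSETS[ip]
    return enriched

print(enrich({"source.ip": "10.0.0.5"}))
# {'source.ip': '10.0.0.5', 'threat_intel_hit': False, 'asset': {'host': 'ws-014', 'owner': 'finance'}}
```

In a SIEM these lookups would typically be backed by the platform's own lookup tables or watchlists rather than hard-coded dictionaries.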

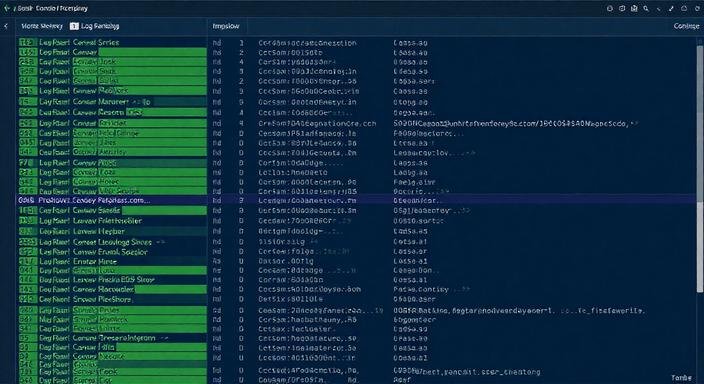

5. SIEM-Specific Parsing Examples

Splunk Example

Example regex extraction:

```
LOGIN EVENT - user (?P<username>[a-zA-Z0-9._-]+) attempted login from (?P<src_ip>\d+\.\d+\.\d+\.\d+) at (?P<timestamp>[\d\-:\s]+) status=(?P<status>[A-Z]+) reason: (?P<reason>.*)
```
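Before deploying the extraction, the pattern can be checked offline. Python's `re` module accepts the same `(?P<name>...)` group syntax, so the sketch below runs the pattern, with its backslash escapes written out, against a hypothetical sample line. Splunk's PCRE engine and Python's engine differ in some advanced features, so this is a sanity check rather than a guarantee.

```python
import re

LOGIN_RE = re.compile(
    r"LOGIN EVENT - user (?P<username>[a-zA-Z0-9._-]+)"
    r" attempted login from (?P<src_ip>\d+\.\d+\.\d+\.\d+)"
    r" at (?P<timestamp>[\d\-:\s]+)"
    r" status=(?P<status>[A-Z]+)"
    r" reason: (?P<reason>.*)"
)

# Hypothetical sample event.
line = ("LOGIN EVENT - user j.doe attempted login from 10.0.0.5 "
        "at 2024-01-15 10:22:01 status=FAILED reason: bad password")
match = LOGIN_RE.search(line)
print(match.groupdict() if match else "no match")
# {'username': 'j.doe', 'src_ip': '10.0.0.5', 'timestamp': '2024-01-15 10:22:01', 'status': 'FAILED', 'reason': 'bad password'}
```

The order `._-` inside the username class matters: with `-` last it is a literal hyphen, whereas `.-_` would define a character range from `.` to `_` that also admits `:`, `=`, `@`, and other punctuation.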

Microsoft Sentinel Example (Kusto)

Using the parse operator:

```
CustomLog
| parse RawData with "LOGIN EVENT - user " username " attempted login from " src_ip " at " timestamp " status=" status " reason: " reason
```
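The parse operator matches the quoted strings literally and assigns the text between them to the named columns. The Python function below approximates that idea for experimentation outside Sentinel; `delimited_parse` is a hypothetical helper, not Kusto's implementation, and it does not model type conversion or the operator's other parsing modes.

```python
import re

def delimited_parse(text, parts):
    """parts alternates literals and column names: [literal, column, literal, column, ...].
    Each column captures the shortest text before the next literal; a final column takes the rest."""
    pattern = ""
    for i, part in enumerate(parts):
        if i % 2 == 0:
            pattern += re.escape(part)       # literal delimiter
        elif i == len(parts) - 1:
            pattern += f"(?P<{part}>.*)"     # last column: rest of the line
        else:
            pattern += f"(?P<{part}>.*?)"    # shortest text up to the next literal
    match = re.match(pattern, text)
    return match.groupdict() if match else None

parts = ["LOGIN EVENT - user ", "username", " attempted login from ", "src_ip",
         " at ", "timestamp", " status=", "status", " reason: ", "reason"]
line = ("LOGIN EVENT - user j.doe attempted login from 10.0.0.5 "
        "at 2024-01-15 10:22:01 status=FAILED reason: bad password")
print(delimited_parse(line, parts))
```

Literal-anchored parsing is easy to read, but it has no notion of character classes, so a malformed line can still match with unexpected values in a column; the regex form is stricter.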

QRadar Example

In QRadar, use the DSM Editor or custom event properties:

- Define a Regex Property
- Test extraction on sample payloads
- Map extracted properties to QRadar fields
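For the step of testing extraction on sample payloads, a quick offline pass over saved payloads can catch variants that a pattern misses before it reaches the DSM Editor. The Python sketch below is hypothetical tooling, not part of QRadar: it reports the value of one capture group per payload. QRadar evaluates Java regular expressions, so Python's `re` is only an approximation.

```python
import re

def check_property(pattern, payloads, group=1):
    """Return (payload, captured value or None) for each sample payload."""
    compiled = re.compile(pattern)
    results = []
    for payload in payloads:
        match = compiled.search(payload)
        results.append((payload, match.group(group) if match else None))
    return results

# Hypothetical sample payloads: one matching variant and one that the pattern misses.
payloads = [
    "LOGIN EVENT - user j.doe attempted login from 10.0.0.5 at 2024-01-15 10:22:01 status=FAILED reason: bad password",
    "LOGOUT EVENT - user j.doe session closed",
]
results = check_property(r"user (\S+) attempted login", payloads)
misses = [p for p, value in results if value is None]
print(f"{len(payloads) - len(misses)}/{len(payloads)} payloads matched")
for p in misses:
    print("no match:", p)
```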

Elastic / Logstash Example

Grok pattern example:

```
grok {
  match => { "message" => "LOGIN EVENT - user %{USERNAME:username} attempted login from %{IP:src_ip} at %{TIMESTAMP_ISO8601:timestamp} status=%{WORD:status} reason: %{GREEDYDATA:reason}" }
}
```
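Grok patterns are named regular expressions: each `%{PATTERN:field}` expands to a regex, and the field name becomes a named capture. The Python sketch below illustrates that expansion with simplified, hypothetical stand-ins for the referenced patterns (the real Logstash definitions are broader; for example, `IP` also covers IPv6). It is meant for understanding, not as a replacement for the grok filter.

```python
import re

# Simplified stand-ins for a few grok patterns; the real Logstash definitions are broader.
PATTERNS = {
    "USERNAME": r"[a-zA-Z0-9._-]+",
    "IP": r"(?:\d{1,3}\.){3}\d{1,3}",  # IPv4 only in this sketch
    "TIMESTAMP_ISO8601": r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:\.\d+)?(?:Z|[+-]\d{2}:?\d{2})?",
    "WORD": r"\b\w+\b",
    "GREEDYDATA": r".*",
}
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")

def grok_to_regex(expression):
    """Replace each %{NAME:field} with a named group; %{NAME} becomes a non-capturing group."""
    def expand(ref):
        body = PATTERNS[ref.group(1)]
        field = ref.group(2)
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return GROK_REF.sub(expand, expression)

expression = ("LOGIN EVENT - user %{USERNAME:username} attempted login from %{IP:src_ip} "
              "at %{TIMESTAMP_ISO8601:timestamp} status=%{WORD:status} reason: %{GREEDYDATA:reason}")
line = ("LOGIN EVENT - user j.doe attempted login from 10.0.0.5 "
        "at 2024-01-15 10:22:01 status=FAILED reason: bad password")
match = re.search(grok_to_regex(expression), line)
print(match.groupdict() if match else "no match")
# {'username': 'j.doe', 'src_ip': '10.0.0.5', 'timestamp': '2024-01-15 10:22:01', 'status': 'FAILED', 'reason': 'bad password'}
```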